Want faster code reviews? Start tracking key reviewer metrics.

Code reviews often slow down development due to delays like overloaded reviewers or long-lived branches. By monitoring metrics such as response time, reviewer workload, and comment resolution speed, teams can identify bottlenecks and improve efficiency.

Here’s how metrics help:

- First Response Time: Faster PR responses (e.g., within 24 hours) reduce initial delays.

- Workload Balance: Track PR assignments to avoid overburdening reviewers.

- Comment Resolution: Set clear timelines for addressing feedback to speed up iterations.

- Pull Request Size: Keep changes under 400 lines to simplify reviews.

Tools like ReviewNudgeBot automate notifications, track PR progress, and balance workloads, cutting review times by up to 60%. By focusing on these metrics, teams can reduce delays, improve code quality, and maintain productivity.

Key Metrics That Speed Up Reviews

Three metrics expose most review bottlenecks: first response time, reviewer workload balance, and comment resolution speed. Each one points to a part of the process that can be tuned for better efficiency.

First Response Time

First response time is a critical indicator of review efficiency. Research shows that 80.16% of pull requests receive a first response within 24 hours and 68.36% within a standard 8-hour workday. Among pull requests reviewed on the same day, 39.78% get a response within just 10 minutes.
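To see where your own team stands against figures like these, you can compute the same shares from PR timestamps. The snippet below is a minimal sketch, assuming you already have the opened and first-review times for each PR; the function names and sample data are hypothetical and not tied to any particular tool.

```python
from datetime import datetime

def first_response_hours(opened_at, first_review_at):
    """Hours between a PR being opened and its first reviewer response."""
    return (first_review_at - opened_at).total_seconds() / 3600

def share_within(hours_list, limit_hours):
    """Fraction of PRs whose first response arrived within limit_hours."""
    if not hours_list:
        return 0.0
    return sum(h <= limit_hours for h in hours_list) / len(hours_list)

# Hypothetical sample: (opened_at, first_review_at) pairs.
prs = [
    (datetime(2024, 3, 4, 9, 0), datetime(2024, 3, 4, 9, 5)),
    (datetime(2024, 3, 4, 10, 0), datetime(2024, 3, 4, 16, 0)),
    (datetime(2024, 3, 4, 11, 0), datetime(2024, 3, 5, 9, 0)),
    (datetime(2024, 3, 4, 12, 0), datetime(2024, 3, 6, 12, 0)),
]
hours = [first_response_hours(opened, reviewed) for opened, reviewed in prs]

print(share_within(hours, 24))  # share answered within 24 hours
print(share_within(hours, 8))   # share answered within an 8-hour workday
```

This sketch counts wall-clock hours. If your team spans time zones or you only want to count working hours, the duration function is the place to adjust.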

Here’s how to improve first response times:

- Automate initial checks to catch simple issues early.

- Dedicate specific time blocks for reviews to ensure consistent attention.

- Enable priority notifications for high-priority changes.

Balancing reviewer workload is another key factor in reducing response delays.

Reviewer Workload Balance

In larger organizations, 67% of developers report waiting more than a week for pull request approvals. Monitoring the number of open reviews assigned to each reviewer can help prevent burnout and maintain speed.
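Counting open reviews per reviewer takes only a few lines once you have a list of open PRs. Here is a rough sketch; the dictionary shape, the reviewer names, and the `max_open` threshold are all illustrative assumptions.

```python
from collections import Counter

def open_review_counts(open_prs):
    """Count open review requests per reviewer."""
    counts = Counter()
    for pr in open_prs:
        counts.update(pr["reviewers"])
    return counts

def overloaded(counts, max_open):
    """Reviewers holding more than max_open open reviews, sorted by name."""
    return sorted(name for name, n in counts.items() if n > max_open)

# Hypothetical open PRs with their requested reviewers.
open_prs = [
    {"id": 1, "reviewers": ["ana", "ben"]},
    {"id": 2, "reviewers": ["ana"]},
    {"id": 3, "reviewers": ["ana", "chi"]},
    {"id": 4, "reviewers": ["ben"]},
]
counts = open_review_counts(open_prs)
print(overloaded(counts, max_open=2))
```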

Meta uses a metric called 'P75 Time In Review': the 75th-percentile review time, which shows how long the slowest 25% of pull requests take. They’ve also implemented notification systems and a method called "Eyeball Time" to measure the actual time spent analyzing reviews, significantly cutting down delays.
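A P75 figure is a 75th percentile: three quarters of pull requests finish at or below it, and the slowest quarter sit above it. The sketch below uses the simple nearest-rank definition on made-up review durations. It illustrates the general statistic only and is not Meta's implementation.

```python
import math

def percentile_nearest_rank(values, pct):
    """Nearest-rank percentile: the smallest value with at least pct% of the data at or below it."""
    if not values:
        raise ValueError("values must not be empty")
    ordered = sorted(values)
    rank = math.ceil(pct / 100 * len(ordered))
    return ordered[max(rank, 1) - 1]

# Hypothetical hours each PR spent in review.
hours_in_review = [2, 5, 8, 12, 20, 30, 50, 96]

p75 = percentile_nearest_rank(hours_in_review, 75)
slowest = [h for h in hours_in_review if h > p75]
print(p75, slowest)
```

Tracking a high percentile instead of the average keeps a handful of quick reviews from hiding the PRs that are actually stuck.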

"At Google, we optimize for the speed at which a team of developers can produce a product together, as opposed to optimizing for the speed at which an individual developer can write code."

Equally important is how quickly comments are addressed during the review process.

Comment Resolution Speed

The time it takes to resolve comments has a direct impact on overall review duration. Studies indicate that 29% to 63% of the code review lifecycle is spent waiting for accepted changes to be merged.

Here are strategies to improve comment resolution speed:

- Set clear response expectations: Define specific timelines for addressing comments to ensure accountability.

- Track resolution patterns: Monitor how quickly comments are addressed and identify areas where delays occur. Keep in mind that complex discussions, like architectural changes, naturally require more time.

- Leverage automation: Use automated tools to handle repetitive or common issues, allowing reviewers to focus on more critical feedback.
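Tracking resolution patterns can start with two numbers: the typical time to resolve a comment thread, and the threads that have stayed open past your agreed timeline. The following is a minimal sketch under assumed data; the thread fields and the 24-hour limit are hypothetical.

```python
from datetime import datetime, timedelta
from statistics import median

def resolution_hours(threads):
    """Hours from creation to resolution for each resolved thread."""
    return [
        (t["resolved_at"] - t["created_at"]).total_seconds() / 3600
        for t in threads
        if t["resolved_at"] is not None
    ]

def overdue_threads(threads, now, limit):
    """IDs of unresolved threads that have been open longer than limit."""
    return [
        t["id"] for t in threads
        if t["resolved_at"] is None and now - t["created_at"] > limit
    ]

# Hypothetical comment threads on a PR.
threads = [
    {"id": "t1", "created_at": datetime(2024, 3, 4, 9, 0), "resolved_at": datetime(2024, 3, 4, 11, 0)},
    {"id": "t2", "created_at": datetime(2024, 3, 4, 10, 0), "resolved_at": datetime(2024, 3, 4, 16, 0)},
    {"id": "t3", "created_at": datetime(2024, 3, 4, 9, 30), "resolved_at": None},
    {"id": "t4", "created_at": datetime(2024, 3, 5, 8, 0), "resolved_at": datetime(2024, 3, 5, 9, 0)},
]
now = datetime(2024, 3, 5, 12, 0)

print(median(resolution_hours(threads)))
print(overdue_threads(threads, now, timedelta(hours=24)))
```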

"When measuring and optimizing code velocity, focus on time-to-merge, not time-to-first-response or time-to-accept." – Gunnar Kudrjavets

Using Tools to Track Review Metrics

Automated tools have become essential for teams looking to analyze and improve review metrics. For instance, a Microsoft study revealed that using intelligent notification systems reduced pull request (PR) completion times from 197 hours to just 77 hours - a 60% improvement.

PR Notification Systems

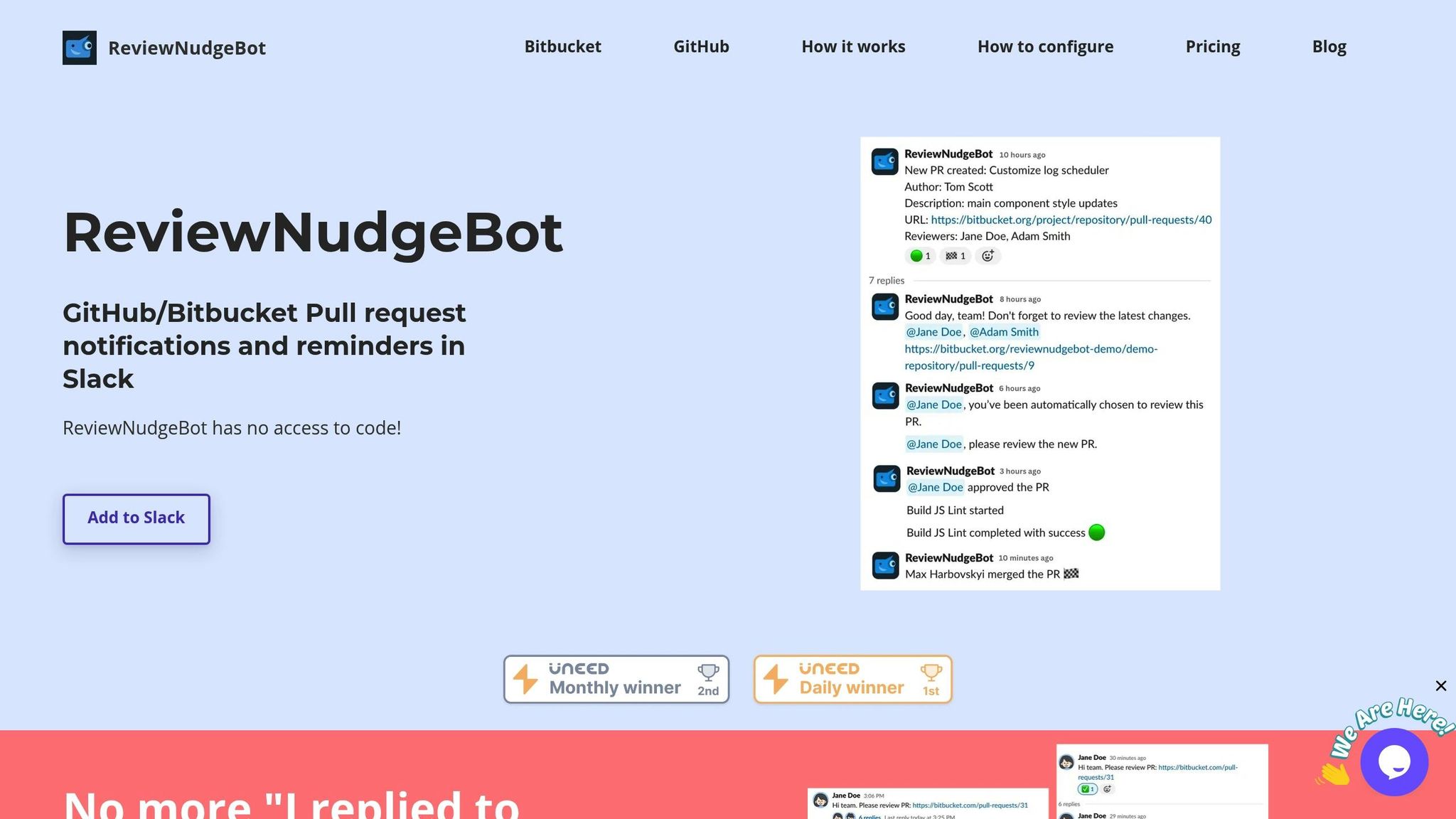

Automated notification systems play a crucial role in keeping review schedules on track by sending timely alerts. Tools like ReviewNudgeBot integrate with Slack to simplify this process, offering features such as:

- Real-time build status updates

- Notifications for comments - whether new, replied-to, or resolved

- Customizable reminders during working hours

- Grouped PR notifications neatly organized within Slack threads

Customizing notification settings helps reduce alert fatigue while ensuring critical updates are never missed. In fact, data shows that 73% of over 210,000 automated reminders were rated as helpful by development teams.
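The logic behind working-hours reminders is easy to reason about in code. The sketch below is a generic illustration, not ReviewNudgeBot's actual behavior or API: the 9-to-5 weekday window and the 4-hour gap between reminders are assumptions, and the datetimes are naive local times.

```python
from datetime import datetime, time

def in_working_hours(now, start=time(9, 0), end=time(17, 0)):
    """True on Monday to Friday between start (inclusive) and end (exclusive)."""
    return now.weekday() < 5 and start <= now.time() < end

def should_remind(now, last_reminded_at, min_gap_hours=4):
    """Remind only during working hours and at most once per min_gap_hours."""
    if not in_working_hours(now):
        return False
    if last_reminded_at is None:
        return True
    return (now - last_reminded_at).total_seconds() >= min_gap_hours * 3600

monday_morning = datetime(2024, 3, 4, 10, 0)
print(should_remind(monday_morning, last_reminded_at=None))
```

Spacing reminders out like this is one way to limit alert fatigue while still surfacing PRs that are waiting.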

Smart Reviewer Assignment

Automated reviewer assignment systems go a step further by addressing delays in the review process. A joint study by Microsoft and Delft University of Technology analyzed 22,875 pull requests to pinpoint common bottlenecks and create automated solutions.

Here’s what these systems typically offer:

- Fair task distribution to avoid overburdening specific team members

- Automatic escalation for overdue reviews

- Workload balancing to ensure efficiency across the team

- Priority flagging for changes that require immediate attention
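The first two features in the list can be approximated with a least-loaded assignment rule and a simple overdue check. This is a sketch of one possible approach, not the method from the study or from any specific tool; the function names, reviewer names, and the 48-hour escalation threshold are assumptions.

```python
def pick_reviewer(open_counts, author, unavailable=()):
    """Choose the eligible reviewer with the fewest open reviews (ties broken by name)."""
    eligible = [r for r in open_counts if r != author and r not in unavailable]
    if not eligible:
        raise ValueError("no eligible reviewer")
    return min(eligible, key=lambda r: (open_counts[r], r))

def needs_escalation(hours_waiting, overdue_after_hours=48):
    """True once a review has waited longer than the overdue threshold."""
    return hours_waiting > overdue_after_hours

# Hypothetical number of open reviews per team member.
open_counts = {"ana": 3, "ben": 1, "chi": 1}

print(pick_reviewer(open_counts, author="ben"))
print(needs_escalation(50))
```

A real system would also weigh code ownership and expertise; this version only balances load.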

PR Progress Tracking

Tracking the progress of pull requests is another key to minimizing delays. Modern tools provide transparency across different review stages, helping teams identify and resolve issues faster. Here’s a breakdown of key metrics and their impact:

| Review Stage | Metrics Tracked | Impact |
|---|---|---|
| Initial Response | Time to first review | Reduces delays in starting reviews |
| Discussion Phase | Comment resolution time | Speeds up iterations |
| Final Approval | Time to merge | Improves overall completion rates |
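The stages in the table can be derived from four timestamps per PR. Here is a minimal sketch, assuming you can obtain the opened, first-review, approval, and merge times; the key names are illustrative.

```python
from datetime import datetime

def stage_durations(opened_at, first_review_at, approved_at, merged_at):
    """Hours spent in each stage of a pull request's life."""
    def hours(start, end):
        return (end - start).total_seconds() / 3600
    return {
        "time_to_first_review": hours(opened_at, first_review_at),
        "discussion": hours(first_review_at, approved_at),
        "approval_to_merge": hours(approved_at, merged_at),
        "time_to_merge": hours(opened_at, merged_at),
    }

def slowest_stage(durations):
    """Name of the stage that took longest (excluding the overall total)."""
    stages = ("time_to_first_review", "discussion", "approval_to_merge")
    return max(stages, key=lambda s: durations[s])

# Hypothetical timeline for a single PR.
durations = stage_durations(
    opened_at=datetime(2024, 3, 4, 9, 0),
    first_review_at=datetime(2024, 3, 4, 12, 0),
    approved_at=datetime(2024, 3, 5, 9, 0),
    merged_at=datetime(2024, 3, 5, 15, 0),
)
print(durations)
print(slowest_stage(durations))
```

Splitting the total this way shows whether a slow PR was waiting for a first look, stuck in discussion, or approved but not merged.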

"ReviewNudgeBot applies strategies from the research paper 'Nudge: Accelerating Overdue Pull Requests toward Completion,' which demonstrated a 60% reduction in PR lifetime across 8,500 teams."

To make PR tracking even more effective, teams can:

- Set up webhook integrations for real-time updates

- Use emoji reactions to indicate PR status

- Monitor build status notifications closely

- Analyze patterns in comment resolution


Setting Up Review Metrics

Clear goals, the right tools, and consistent evaluations can significantly improve your team's development workflow and efficiency.

Setting Metric Goals

The first step is to define baseline metrics that align with your team's objectives. Here are some key performance indicators to focus on:

| Metric Type | Target Goal | Impact |
|---|---|---|
| First Response Time | Within 24 hours | Reduces delays in initial review |
| Review Completion | Under 48 hours | Keeps development moving forward |
| Comment Resolution | Same business day | Speeds up iteration cycles |
| Pull Request Size | Under 400 lines | Simplifies and accelerates reviews |

These targets should be customized to fit your team's specific needs and workflows. Once goals are set, use automation tools like ReviewNudgeBot to track and manage these metrics effectively.
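Once targets are written down, checking a PR against them is mechanical. The sketch below encodes the numeric targets from the table; the metric names are hypothetical, the same-business-day comment target is left out because it is not a simple number, and a value exactly at the limit is treated as passing for simplicity.

```python
# Numeric targets taken from the table above; adjust them to fit your team.
TARGETS = {
    "first_response_hours": 24,
    "review_completion_hours": 48,
    "pr_size_lines": 400,
}

def missed_targets(metrics, targets=TARGETS):
    """Names of metrics that exceed their target, sorted alphabetically."""
    return sorted(
        name for name, limit in targets.items()
        if name in metrics and metrics[name] > limit
    )

# Hypothetical measurements for one PR.
pr_metrics = {
    "first_response_hours": 30,
    "review_completion_hours": 40,
    "pr_size_lines": 650,
}
print(missed_targets(pr_metrics))
```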

"By thoughtfully monitoring code review metrics, teams can continuously enhance their productivity and maintain high-quality standards in their development workflow".

Setting Up ReviewNudgeBot

Follow these steps to integrate ReviewNudgeBot into your team's workflow:

- Initial Setup
  - Add the bot to your Slack workspace.
  - Retrieve webhook details from the invite message.
  - Configure repository settings in GitHub or Bitbucket.

- Metric Configuration
  - Enable PR lifecycle tracking with status emojis.
  - Set up build status notifications.
  - Monitor comment activity and resolution rates.
  - Automate reviewer assignments to streamline the process.

- Notification Settings
  - Define working hours for reminders.
  - Set escalation rules for overdue reviews.

Once configured, tailor notifications and tracking features to align with your team’s existing processes.

Regular Metric Reviews

Consistent monitoring and refinement of review metrics are essential. Schedule weekly reviews to evaluate performance, balance workloads, and identify areas for improvement. Key areas to assess include throughput, comment resolution rates, and the effectiveness of automation tools.
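Throughput is the simplest of these numbers to bring to a weekly review: count merged PRs per week and watch the trend. This is a rough sketch that groups hypothetical merge dates by ISO week.

```python
from collections import Counter
from datetime import date

def weekly_throughput(merge_dates):
    """Number of merged PRs per ISO (year, week), in chronological order."""
    counts = Counter()
    for d in merge_dates:
        iso = d.isocalendar()
        counts[(iso[0], iso[1])] += 1
    return dict(sorted(counts.items()))

# Hypothetical merge dates across two weeks.
merged = [
    date(2024, 3, 4),
    date(2024, 3, 6),
    date(2024, 3, 8),
    date(2024, 3, 12),
    date(2024, 3, 15),
]
print(weekly_throughput(merged))
```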

Pro Tip: Use ReviewNudgeBot's grouped Slack notifications to maintain an organized review history, making it easier to analyze trends and spot bottlenecks.

"What you measure is what you'll get." - H. Thomas Johnson

Encourage team members to share their observations after each review cycle. This feedback can highlight recurring challenges and enable real-time adjustments to your strategies.

Conclusion: Better Reviews Through Metrics

Main Points

Using reviewer metrics can significantly improve code reviews. Research shows that data-driven organizations are three times more likely to make better decisions. Top contributors tend to keep just 5% of pull requests (PRs) unreviewed, while the average contributor leaves about 20% unreviewed. Teams that adopt structured review processes report up to 80% fewer bugs after release and 50% quicker onboarding for new members.

"Metrics are essential for determining efficiency in document review. Ultimately, they translate into cost savings for clients. They provide insights into reviewer productivity and accuracy, which are critical for successful reviews."

– Heidi Girod

These findings set the foundation for actionable improvements outlined below.

Getting Started

Focus on these key metrics to refine your review process:

| Focus Area | Target Goal | Expected Outcome |
|---|---|---|
| Response Time | 1.5 hours (leading) | Faster iteration cycles |
| Review Size | Under 400 lines | Higher quality feedback |
| Review Duration | Under 60 minutes | Improved engagement |
| Defect Reduction | Up to 65% | Enhanced code quality |

Start by aligning your team’s efforts with these targets. Tools like ReviewNudgeBot can simplify this process by tracking key metrics automatically. Its PR lifecycle tracking and automated reminders ensure consistent review cycles and offer insights into performance trends.

With the right tools and a dedicated team, you can achieve measurable improvements. Studies have shown that regular code reviews can lead to a 65% reduction in defects.

FAQs

How does ReviewNudgeBot help reduce delays in code reviews?

ReviewNudgeBot streamlines the code review process by automating essential tasks that often cause delays. It sends timely reminders to reviewers about pending pull requests (PRs) and alerts authors when action is required, such as addressing requested changes or fixing failed builds. This helps ensure PRs are handled without unnecessary delays, keeping the workflow smooth and uninterrupted.

By taking over notifications and updates, ReviewNudgeBot helps teams maintain momentum, avoid bottlenecks, and improve the pace of release cycles - all while keeping the process organized and balanced.

What are the best practices for setting up and using reviewer metrics to improve code reviews?

To make the most of reviewer metrics, start by setting clear goals for your code review process. Focus on tracking important metrics like cycle time (how long it takes to finish a review), review throughput (the number of reviews completed in a specific period), and pull request size (smaller PRs are typically quicker and easier to review). These metrics can shed light on how efficient your workflow is.

Streamlining the process with automation can also be a game-changer. For instance, automated reminders and notifications can help ensure feedback is timely and delays are minimized. Tools like Slack-integrated bots are particularly helpful - they can monitor pull request statuses and send updates to keep everyone on the same page. By regularly analyzing these metrics, you can pinpoint bottlenecks and refine your process to improve team productivity and code quality.

Equally important is building a culture of open communication and ongoing feedback. When collaboration and transparency are prioritized, it becomes easier for the entire team to stay aligned and committed to maintaining a smooth and efficient review process.

Why is it important to keep pull requests under 400 lines, and how does this affect the review process?

Keeping pull requests (PRs) under 400 lines is crucial for maintaining an efficient workflow. Smaller PRs are quicker to review, which means faster feedback and fewer delays in the development process. On the other hand, large PRs can overwhelm reviewers, making it harder to spot bugs or provide useful feedback.

By sticking to smaller PRs, teams can boost collaboration, minimize the risk of merge conflicts, and uphold strong code quality. This approach ensures a smoother review process and keeps development cycles moving along at a steady pace.
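A size check like this can run before a PR is opened. The sketch below counts a PR's size as added plus deleted lines and skips lock and snapshot files. Both choices are assumptions, since the 400-line guideline does not say how lines should be counted, and the file paths are made up.

```python
def changed_lines(files, ignore_suffixes=(".lock", ".snap")):
    """Total added plus deleted lines, skipping files with ignored suffixes."""
    return sum(
        f["additions"] + f["deletions"]
        for f in files
        if not f["path"].endswith(ignore_suffixes)
    )

def is_reviewable(files, limit=400):
    """True when the counted change stays under the line limit."""
    return changed_lines(files) < limit

# Hypothetical per-file change statistics for one PR.
files = [
    {"path": "src/app.py", "additions": 120, "deletions": 30},
    {"path": "src/api.py", "additions": 200, "deletions": 40},
    {"path": "poetry.lock", "additions": 900, "deletions": 850},
]
print(changed_lines(files), is_reviewable(files))
```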