Code reviews slowing your team down? Meta's experiment with 30,000 engineers shows how small nudges cut the number of diffs older than three days by 11.89% without sacrificing quality.

Here’s how they did it:

- Behavioral Nudges: Automated reminders (via tools like Slack) prompt reviewers to act without being intrusive.

- Faster Reviews: Time to the first reviewer action improved by 9.9%, and diffs older than three days dropped by 11.89%.

- Maintained Quality: Despite faster reviews, thoroughness and quality stayed consistent.

- Tailored Prompts: Nudges were customized based on reviewer habits and relationships, ensuring effectiveness.

Want to speed up your code reviews? This article explains how to implement these strategies using tools like Slack, GitHub, and Bitbucket, while keeping your team productive and engaged.

Key Results from Meta's Code Review Experiment

Meta’s experiment involving 30,000 engineers demonstrated that well-timed nudges can significantly speed up code reviews. The initiative focused on improving three critical areas of the development process while carefully monitoring metrics to ensure faster reviews didn’t compromise quality.

How Nudges Improved Review Speed and Participation

The data showed some impressive outcomes:

- Diffs older than three days dropped by 11.89% (p=0.004), and the time to the first reviewer action improved by 9.9% (p=0.010). This meant fewer pull requests lingering in the queue, which sped up feature delivery and reduced interruptions caused by context switching.

- The time diffs spent in the "needs review" state decreased by 6.8% (p=0.049). While this might seem like a small percentage, across thousands of daily pull requests the time savings added up and streamlined workflows significantly.

Importantly, guardrail metrics - like "Eyeball Time" (the total time reviewers spent examining a diff) and the percentage of diffs reviewed within 24 hours - remained steady. This stability confirmed that speeding up the process didn’t come at the expense of review quality. These findings highlight how targeted nudges can address bottlenecks without undermining thoroughness.

Main Problems Meta Solved with Nudges

These improvements tackled two major challenges in Meta's workflow: stale pull requests and uneven reviewer workloads. Pull requests often stalled, not because they were particularly difficult, but because they simply got overlooked.

The experiment’s key hypothesis was that NudgeBot could shorten the time a diff spent waiting for review. It achieved this by identifying diffs idle for 24 hours and ranking potential reviewers based on their likelihood to take action.
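The selection step described above can be sketched in a few lines. This is a minimal illustration, not Meta's implementation: the `Diff` class, the `reviewer_scores` field (a stand-in for whatever model estimates each reviewer's likelihood to act), and the `pick_nudges` helper are all hypothetical names.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

IDLE_THRESHOLD = timedelta(hours=24)  # idle window described in the article


@dataclass
class Diff:
    diff_id: str
    last_activity: datetime
    # Hypothetical: estimated probability that each reviewer acts on this diff.
    reviewer_scores: dict


def stale_diffs(diffs, now):
    """Return the diffs with no activity for at least IDLE_THRESHOLD."""
    return [d for d in diffs if now - d.last_activity >= IDLE_THRESHOLD]


def rank_reviewers(diff):
    """Order reviewers from most to least likely to act."""
    return sorted(diff.reviewer_scores, key=diff.reviewer_scores.get, reverse=True)


def pick_nudges(diffs, now, top_n=1):
    """Map each stale diff to the top_n reviewers worth nudging."""
    return {d.diff_id: rank_reviewers(d)[:top_n] for d in stale_diffs(diffs, now)}
```

Calling `pick_nudges` on a schedule (for example, once an hour) would yield the diffs to nudge and whom to notify for each one.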

This approach helped distributed teams overcome visibility issues, where pending reviews could easily get buried under other tasks. By drawing attention to idle diffs, nudges ensured they received timely responses.

Another advantage was balancing workloads. Instead of overloading a small group of reviewers, the system used data to target those most likely to respond quickly and effectively. It allowed reviewers a full day to act on pull requests naturally before sending a gentle reminder, striking a healthy balance between proactive engagement and autonomy.

These results offer valuable insights for anyone looking to adopt similar strategies to streamline their own code review processes.

Setting Up Automated Nudges with Slack and GitHub/Bitbucket

Take a page from Meta's playbook by integrating your development tools with Slack. This setup ensures a smooth flow of updates, keeping code reviews on track without overwhelming your team with constant notifications.

Configuring Slack for Streamlined Code Review Communication

To replicate Meta's efficient approach, start by organizing your Slack workspace. Smaller teams can stick to a single #code-review channel, while larger organizations might benefit from creating separate channels for each repository or feature branch. Pin messages in these channels to outline the review process clearly - include details like expected response times, required information, and steps to follow after approval. This reduces confusion and boosts accountability.

Make the most of Slack's features like @mentions, emoji reactions (👀 for "reviewing", ✅ for "approved", ❌ for "changes needed"), and threaded conversations. These tools help keep discussions focused and make it easy for everyone to track the status of pull requests at a glance.
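If you want a script or bot to read those emoji as a status, one possible approach is a small lookup keyed by Slack's reaction names (`eyes`, `white_check_mark`, `x`). The precedence order below, where a change request outranks an approval, is an assumption for illustration, as is the `pr_status` helper itself.

```python
# Slack reaction names for the emoji convention described above.
REACTION_STATUS = {
    "eyes": "reviewing",
    "white_check_mark": "approved",
    "x": "changes needed",
}

# Assumed precedence: a change request wins over an approval,
# and an approval wins over "someone is looking".
PRECEDENCE = ["x", "white_check_mark", "eyes"]


def pr_status(reactions):
    """Derive a single status from the reaction names on a PR message."""
    for name in PRECEDENCE:
        if name in reactions:
            return REACTION_STATUS[name]
    return "waiting for review"
```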

Connecting GitHub/Bitbucket to Slack

Both GitHub and Bitbucket offer official Slack apps to streamline integration. For Bitbucket Cloud, log in to both platforms, go to your repository settings, select Slack > Settings, and click Connect. From there, choose your Slack workspace and configure notifications.

GitHub’s integration is just as straightforward. Use its official Slack app to receive real-time updates on repository activities directly in your Slack channels. Both platforms let you customize alerts and even perform actions like merging pull requests or assigning tasks without leaving Slack.

For private channels, you can use slash commands like /bitbucket connect <repository URL> to ensure security while enabling automated updates. Once connected, fine-tune your notifications to deliver timely, relevant alerts.

Setting Up Effective Automated Notifications

To avoid notification overload, focus alerts on critical branches like main and release candidates, where delays can have the greatest impact. In Bitbucket, navigate to Repository settings > Slack > Settings, then click Add subscription. You can choose to receive notifications for the entire repository or set branch-specific alerts using patterns to include new feature branches automatically.

Limit notifications to key events such as pull request creation, reviewer assignments, build failures, and merge completions. Skip alerts for minor updates or comments to keep the signal-to-noise ratio high.
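If you route events through your own webhook handler rather than the built-in subscription settings, the same filtering rules can be expressed as a small predicate. This is a sketch under assumptions: the event names and branch patterns are illustrative placeholders, not identifiers from the GitHub or Bitbucket APIs.

```python
from fnmatch import fnmatchcase

# Illustrative values; adjust to your own branching scheme and event names.
BRANCH_PATTERNS = ["main", "release/*"]
KEY_EVENTS = {"pr_created", "reviewer_assigned", "build_failed", "pr_merged"}


def should_notify(event_type, target_branch):
    """Notify only for key events on branches matching a watched pattern."""
    on_watched_branch = any(
        fnmatchcase(target_branch, pattern) for pattern in BRANCH_PATTERNS
    )
    return event_type in KEY_EVENTS and on_watched_branch
```

Using glob patterns means a newly created `release/2.0` branch is covered without any configuration change.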

Consider implementing escalation reminders for pull requests that sit idle for too long. For example, Meta uses a 24-hour threshold to send an initial notification, followed by a second, more urgent reminder after 48 hours. This approach ensures reviews stay on schedule without being overly disruptive.
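The two-step escalation above boils down to mapping idle time to a reminder level. A minimal sketch, assuming the 24-hour and 48-hour thresholds from the example; the level names and the `escalation_level` function are hypothetical.

```python
from datetime import timedelta

# Thresholds from the example above, checked from most to least urgent.
ESCALATION_STEPS = [
    (timedelta(hours=48), "urgent"),
    (timedelta(hours=24), "reminder"),
]


def escalation_level(idle_time):
    """Return the reminder level for a PR idle for idle_time, or None."""
    for threshold, level in ESCALATION_STEPS:
        if idle_time >= threshold:
            return level
    return None
```

To avoid repeat messages, a real scheduler would also need to record which level was last sent for each pull request.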

Additionally, set up keyword-based notifications for terms like "hotfix", "security", or "urgent" to flag high-priority pull requests immediately. This mirrors Meta's strategy for identifying time-sensitive changes.
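Keyword flagging can be as simple as a case-insensitive, whole-word match on the pull request title and description. The sketch below assumes those two text fields are available from your webhook payload; `priority_keywords` is a hypothetical helper name.

```python
import re

PRIORITY_KEYWORDS = ("hotfix", "security", "urgent")

# Whole-word, case-insensitive match so "insecurity" does not trigger "security".
_PATTERN = re.compile(
    r"\b(" + "|".join(re.escape(k) for k in PRIORITY_KEYWORDS) + r")\b",
    re.IGNORECASE,
)


def priority_keywords(title, description=""):
    """Return the sorted set of priority keywords found in the PR text."""
    text = f"{title}\n{description}"
    return sorted({match.group(1).lower() for match in _PATTERN.finditer(text)})
```

A non-empty result could then trigger an immediate alert instead of waiting for the regular reminder window.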

Finally, give reviewers a reasonable window to respond before nudges kick in. Meta's 24-hour guideline strikes a good balance, allowing reviewers to manage their workload while preventing pull requests from falling through the cracks.

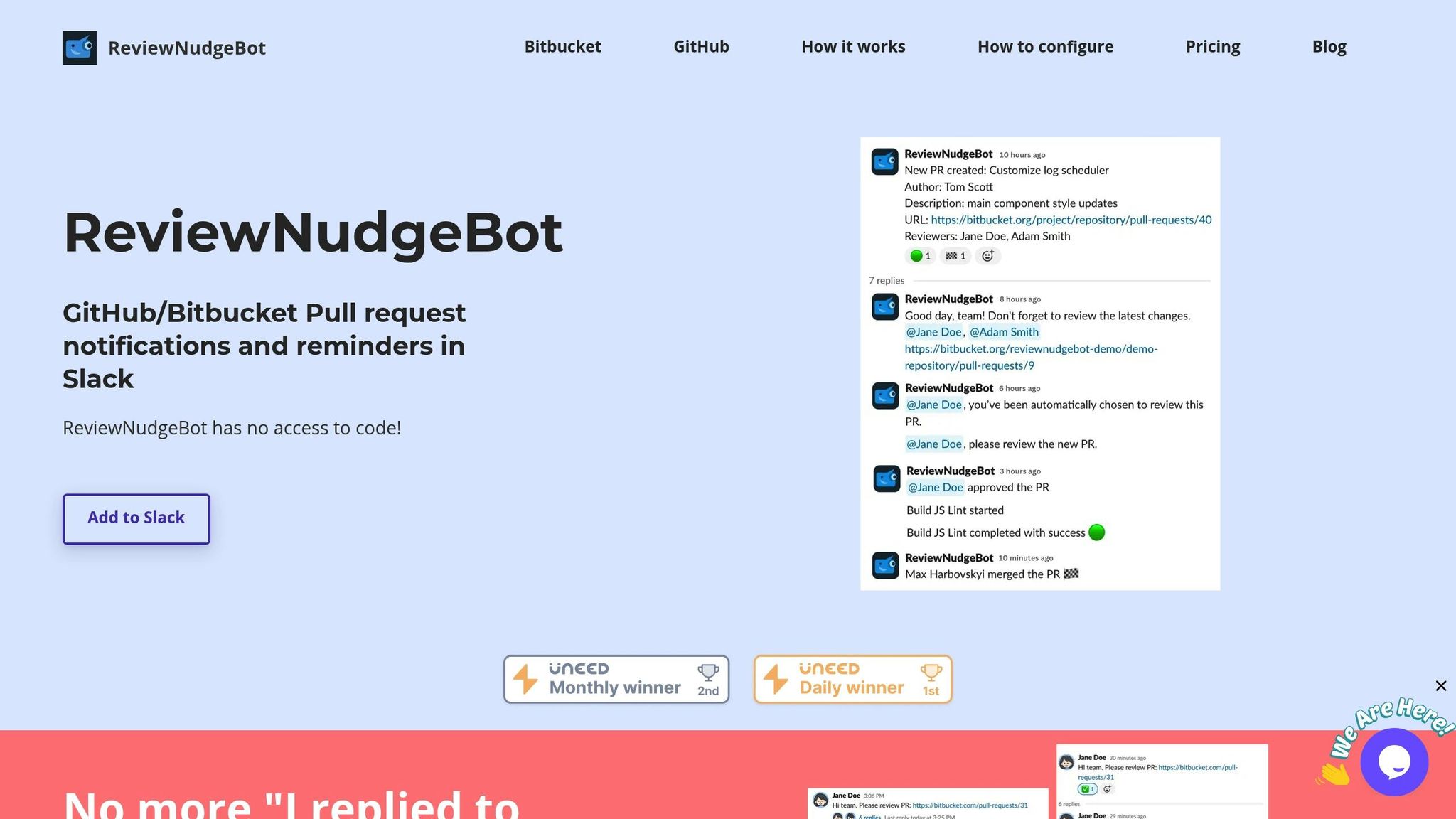

Using ReviewNudgeBot to Speed Up Code Reviews

Inspired by Meta's nudge strategies, ReviewNudgeBot simplifies and accelerates the code review process by automating pull request tracking in Slack. It builds on earlier automated notification setups, integrating them seamlessly to make your reviews smoother and faster.

ReviewNudgeBot's Main Features

ReviewNudgeBot brings the entire pull request workflow into Slack. By connecting your GitHub or Bitbucket repository through a webhook, it automatically tracks pull requests and organizes updates into Slack threads for easy access.

The bot handles reviewer assignments by evenly distributing tasks, notifying specific reviewers or the whole team. It also keeps everyone informed about CI/CD pipeline results, sending alerts to authors if builds fail and notifying reviewers when builds pass, signaling that the pull request is ready for review.
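To illustrate what even distribution can mean in practice, here is one simple policy: assign each new pull request to the eligible reviewer with the fewest open reviews. This is not ReviewNudgeBot's actual algorithm, which the article does not describe; `assign_reviewer` and its inputs are assumptions made for the sketch.

```python
def assign_reviewer(open_reviews, author):
    """Pick the reviewer with the fewest open reviews, excluding the author.

    open_reviews maps reviewer name -> number of reviews currently assigned.
    Ties are broken alphabetically so the result is deterministic.
    """
    candidates = {name: n for name, n in open_reviews.items() if name != author}
    if not candidates:
        raise ValueError("no eligible reviewers")
    return min(candidates, key=lambda name: (candidates[name], name))
```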

Beyond that, ReviewNudgeBot monitors comments, providing updates on new remarks, replies, and resolved discussions. It sends reminders for pending feedback to keep the process moving forward. All notifications are grouped into threaded conversations, complete with emoji status indicators, offering a quick and clear visual summary.

Benefits for US-Based Distributed Teams

For distributed teams spread across various time zones, ReviewNudgeBot solves common challenges. Research on automated pull request reminders reports pull request lifetimes cut by 60% across 8,500 teams, with 73% of over 210,000 reminders rated as helpful. The bot’s customizable reminder schedules ensure notifications reach team members during their working hours, avoiding disruptions outside of work time.
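Working-hours delivery comes down to converting the current time into the reviewer's local time and deferring the reminder if it falls outside their day. The sketch below assumes a 9:00 to 17:00, Monday to Friday schedule; `next_send_time` is a hypothetical helper, not part of any product API. It accepts any `tzinfo`, so you can pass `zoneinfo.ZoneInfo("America/Los_Angeles")` or a fixed offset.

```python
from datetime import datetime, time, timedelta, timezone, tzinfo

# Assumed working hours; make these configurable per reviewer in practice.
WORK_START = time(9, 0)
WORK_END = time(17, 0)


def next_send_time(now_utc: datetime, reviewer_tz: tzinfo) -> datetime:
    """Return when a reminder may be sent, in the reviewer's local time."""
    local = now_utc.astimezone(reviewer_tz)
    is_weekday = local.weekday() < 5
    if is_weekday and WORK_START <= local.time() < WORK_END:
        return local  # inside working hours: send now

    # Otherwise aim for the next start of a working day.
    candidate = local.replace(
        hour=WORK_START.hour, minute=WORK_START.minute, second=0, microsecond=0
    )
    if candidate <= local:
        candidate += timedelta(days=1)
    while candidate.weekday() >= 5:  # skip Saturday and Sunday
        candidate += timedelta(days=1)
    return candidate
```

A reminder raised at 6 p.m. Pacific on a Friday would therefore be held until 9 a.m. the following Monday.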

Automatic reviewer assignment further supports distributed teams by notifying available reviewers, ensuring no pull request lingers unattended, regardless of time zone differences.

The Advantage of Automated Nudging

While manually sharing pull requests or using basic Slack integrations might help, ReviewNudgeBot takes things to the next level. By directly integrating with repositories via webhooks, it eliminates the need to manually share PR links. Instead, it tracks updates automatically and organizes related notifications into a single thread. Intelligent escalation and emoji-based status updates ensure pull requests get timely attention, keeping the process efficient and easy to manage.

With these features, ReviewNudgeBot offers a cost-effective way to optimize code reviews. Teams can try it out with a 14-day Pro trial, no credit card required. The Pro Plan starts at $30 per month, with additional teams costing $20 each - an affordable investment compared to the time and productivity saved through faster, more efficient reviews.

Code Review Tips for US Distributed Teams

Managing code reviews in distributed teams across the United States comes with its own set of challenges. With team members spread across time zones from the Pacific to the Atlantic, creating efficient review processes requires a mix of clear guidelines and automation.

Building Clear and Accountable Review Processes

Clear expectations are the backbone of effective code reviews. For many companies, the standard is for developers to complete reviews within one business day. This is crucial, as inefficient review processes can cut team productivity by 20–40%, with 44% of teams citing slow reviews as their biggest delivery bottleneck.

Delays can have a ripple effect. When pull requests (PRs) sit unreviewed for over 48 hours, developer engagement drops by 32%. This disengagement often leads to slower responses, creating a vicious cycle. Meta's research highlights that predictable turnaround times can significantly speed up delivery.

One standout example is Full Scale’s approach to same-day reviews across time zones. Their system includes:

- Mandatory video walkthroughs for complex changes

- Standardized PR templates with detailed descriptions

- Clear criteria for acceptance and test coverage

- Assigned primary and secondary reviewers in different time zones

- Defined escalation paths for unresolved issues

This structured process helped them cut review delays, leading to a 40% faster time-to-market for new features.

Accountability plays a key role too. Documenting decisions from each review stage prevents future confusion, especially for debated code sections. Use TODO tags with task numbers to track unresolved issues, ensuring nothing gets overlooked.

During reviews, prioritize critical components like authentication, authorization, and features central to the application’s functionality.

Using Automation for Cross-Time-Zone Teams

Automation is a game-changer for distributed teams, especially when time zones create coordination gaps. Developers lose an average of 5.8 hours per week to inefficient review workflows, much of which stems from delays in communication. Automation helps maintain progress even when team members aren’t working simultaneously.

Automated tools can handle initial checks, ensuring code meets quality standards before it even reaches human reviewers. This minimizes back-and-forth, speeding up the process. Setting clear deadlines for reviews, paired with automation, keeps the workflow moving smoothly.

Scheduling tools can align review cycles across time zones, while intelligent escalation ensures PRs don’t linger. Without such systems, PRs in traditional teams can take an average of 4.4 days to get reviewed.

Automation can also provide reviewers with necessary context upfront, like linking design documents or JIRA tickets. This reduces the need for clarifications that often stretch across multiple time zones, saving time and effort.

Creating a Culture of Quick Code Reviews

Establishing a culture that values timely reviews requires both procedural adjustments and a shift in mindset. Encourage developers to plan reviews during specific times, like early in the day or after breaks, to ensure consistent feedback. This approach minimizes disruptions while keeping the process efficient.

Regularly scheduled review sessions can also help maintain steady feedback. By making reviews a planned part of the workflow instead of an interruption, teams can achieve more predictable turnaround times.

Keep PRs concise to make reviews manageable. If a change involves more than five files, took over a day to draft, or would require lengthy review time, consider breaking it into smaller parts. Smaller PRs are easier to review, especially for distributed teams juggling different time zones.
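These rules of thumb are easy to automate as a pre-review check. In the sketch below, the five-file and one-day limits come from the guideline above, while the 60-minute cutoff for a "lengthy" review is an assumed default; `split_reasons` is a hypothetical helper.

```python
def split_reasons(files_changed, drafting_days, review_minutes, max_review_minutes=60):
    """Return the reasons a PR should be split; an empty list means it is fine."""
    reasons = []
    if files_changed > 5:
        reasons.append("touches more than five files")
    if drafting_days > 1:
        reasons.append("took over a day to draft")
    if review_minutes > max_review_minutes:  # assumed threshold for "lengthy"
        reasons.append("needs a lengthy review")
    return reasons
```

A bot could post the returned reasons as a comment on the pull request, which nudges authors toward smaller changes without blocking the merge.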

Active participation is essential, but it’s also important to respect work-life boundaries. Rotating review responsibilities helps distribute the workload and prevents burnout. When team members know their efforts will be reciprocated, they’re more likely to stay engaged.

For extended technical discussions, moving conversations to Slack or similar platforms can be more effective. Real-time exchanges are particularly helpful for complex issues, keeping review threads focused and collaborative.

Finally, emphasize a quality-first mindset. Ensure all team members are familiar with coding standards, security practices, and relevant industry guidelines. This shared understanding makes reviews more consistent and efficient, regardless of who’s handling them.

Pair these cultural practices with automated reminders to keep workflows on track, ensuring that distributed teams stay productive and aligned.

Conclusion: Scale Your Code Reviews with Automation

Meta's experiment with 30,000 engineers shows how combining automation with behavioral nudges can reshape code review workflows when backed by well-integrated systems. By delivering automated prompts through familiar tools like Slack, teams can speed up review times and keep developers engaged. For distributed teams in the U.S., especially those juggling multiple time zones, strategies like Slack integrations and clear accountability measures tackle common causes of delays.

One standout example is ReviewNudgeBot, which applies these principles to simplify and enhance code review processes. It demonstrates how targeted automation can address bottlenecks and improve workflows across teams of any size.

To get started, define a clear strategy and set specific automation goals. Pinpoint the pain points in your current review process, then use automation to reduce friction. Keep refining your approach as you gather insights along the way.

For long-term success, collect team feedback and monitor metrics like review turnaround times and developer engagement. The best teams treat automation as a continuous effort, adjusting workflows to meet changing needs and feedback over time.

FAQs

How can behavioral nudges speed up code reviews while maintaining quality?

Behavioral nudges speed up code reviews by gently prompting reviewers to respond sooner. These cues draw on behavioral principles to cut delays and prompt timely action while keeping reviews thorough.

By reinforcing productive habits and using automated reminders, these nudges help make the review process more efficient without lowering standards. Large-scale experiments have shown that it’s possible to speed up reviews without sacrificing the depth or accuracy of the feedback.

How can teams use Slack with GitHub or Bitbucket to improve code review workflows?

Integrating Slack with GitHub or Bitbucket can make code reviews much more efficient by enabling real-time notifications. These updates keep everyone in the loop about pull request changes, approvals, and comments, helping the team address tasks without delay.

Setting up dedicated Slack channels specifically for code reviews can also improve collaboration. Team members can use mentions or threads to discuss individual pull requests, keeping conversations organized and focused. On top of that, automated reminders and tools with AI-powered capabilities can minimize delays, improve productivity, and ensure that workflows run smoothly - especially for teams working across different locations.

How does ReviewNudgeBot help distributed teams streamline code reviews across time zones?

ReviewNudgeBot makes code reviews easier for teams spread across different time zones by sending customized reminders and notifications based on each team member's working hours. This approach ensures pull requests get the attention they need without unnecessary delays, even in asynchronous setups.

By minimizing hold-ups and promoting timely teamwork, ReviewNudgeBot keeps teams running smoothly and efficiently, regardless of location or time zone.
